SmartBugs: An Execution Framework for Automated Analysis of Smart Contracts

SmartBugs is a new execution framework that simplifies running automated analysis tools on datasets of Solidity smart contracts.

SmartBugs currently supports 10 tools. Its simple plugin system, based on Docker images, makes it easy to add new analysis tools. It runs the tools in parallel to reduce the total execution time, and it normalizes the output that the tools produce to facilitate experiments.
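To make the idea of parallel execution with normalized output concrete, here is a minimal sketch. It is not SmartBugs' actual code: `run_tool`, `run_all`, and the shape of the result record are hypothetical names chosen for illustration, and the stub does not start any Docker container.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_tool(tool, contract):
    """Hypothetical stand-in for running one tool's Docker image on one contract."""
    # A real runner would start a container here. This stub only returns a
    # record in a common shape so the scheduling logic can be shown alone.
    return {"tool": tool, "contract": contract, "findings": []}


def run_all(tools, contracts, workers=4):
    """Run every (tool, contract) pair in parallel and collect the records."""
    jobs = list(product(tools, contracts))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the submitted jobs.
        return list(pool.map(lambda job: run_tool(*job), jobs))


results = run_all(["mythril", "slither"], ["a.sol", "b.sol"])
print(len(results))  # one record per (tool, contract) pair
```

Because every record has the same fields regardless of the tool, later steps (counting, comparing, building a consensus) do not need tool-specific parsing.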

SmartBugs was developed in the context of an empirical review of automated analysis tools on 47,587 Ethereum smart contracts. The paper describing this review has been accepted to the ICSE 2020 Technical Papers Track and is now publicly available.

New datasets

There are two new datasets associated with SmartBugs:

- a dataset of 69 annotated vulnerable smart contracts that can be used to evaluate the precision of analysis tools (the number of contracts in this dataset has increased since the paper was published);

- a dataset with all the smart contracts in the Ethereum Blockchain that have Solidity source code available on Etherscan (a total of 47,518 contracts).

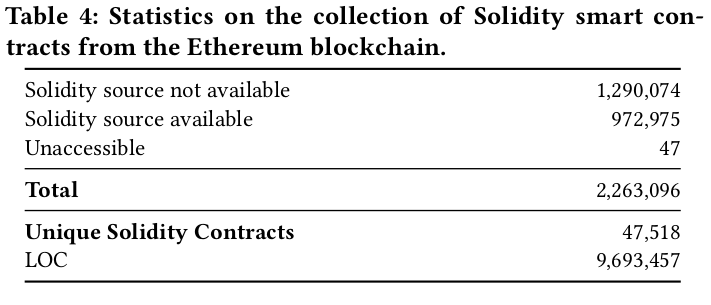

We initially considered 2,263,096 smart contract addresses. We then requested the Solidity source code of those contracts from Etherscan and obtained 972,975 Solidity files. This means that 1,290,074 of the contracts do not have an associated source file on Etherscan. Removing duplicate contracts left 47,518 unique contracts (a total of 9,693,457 lines). According to Etherscan, 47 of the contracts that we requested do not exist (we labelled those as Unaccessible).
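The figures above can be cross-checked with a few lines of arithmetic. This is only a sanity check of the published numbers. It assumes that the 47 inaccessible contracts are counted separately from the contracts without source code, which is what makes the totals add up. The variable names are ours.

```python
addresses = 2_263_096       # contract addresses considered
solidity_files = 972_975    # source files obtained from Etherscan
inaccessible = 47           # contracts that Etherscan reports as non-existent
unique_contracts = 47_518   # contracts left after removing duplicates

# Contracts with no source file, counting the inaccessible ones separately
without_source = addresses - solidity_files - inaccessible

# Files dropped by the duplicate-removal step
dropped_as_duplicates = solidity_files - unique_contracts

print(without_source)         # 1290074
print(dropped_as_duplicates)  # 925457
```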

Automated analysis tools

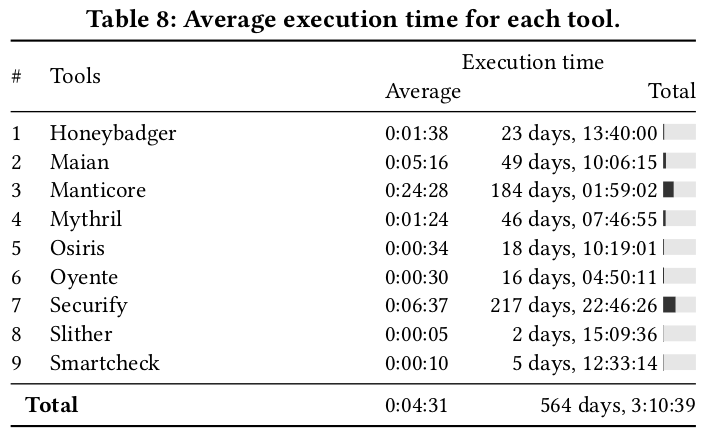

We used SmartBugs to execute 9 automated analysis tools on the two datasets. In total, we ran 428,337 analyses that took approximately 564 days and 3 hours, which makes this the largest experimental setup to date, both in the number of tools and in execution time.

The selected tools were: HoneyBadger, Maian, Manticore, Mythril, Osiris, Oyente, Securify, Slither, and SmartCheck.

Summary of findings

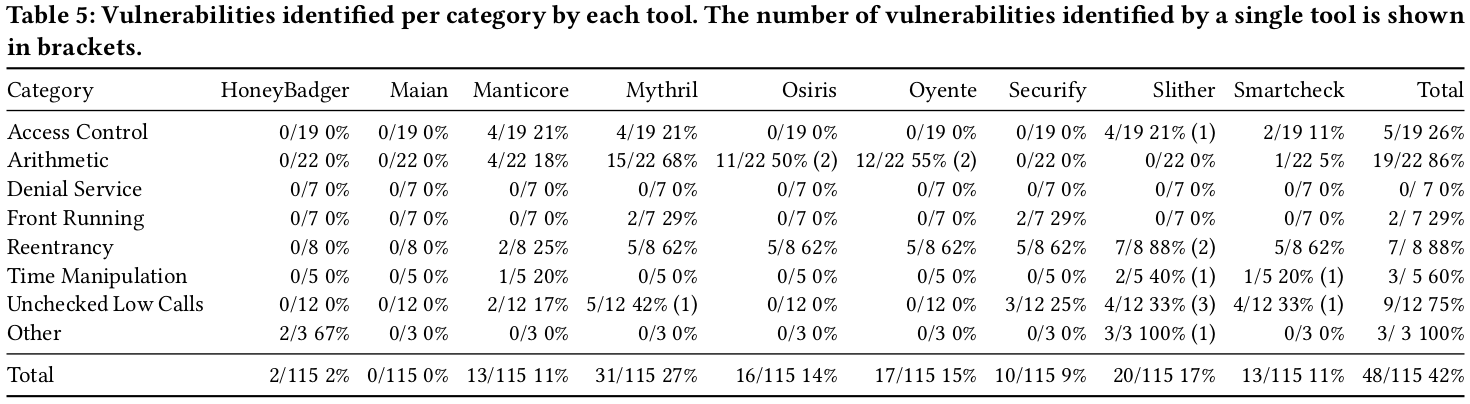

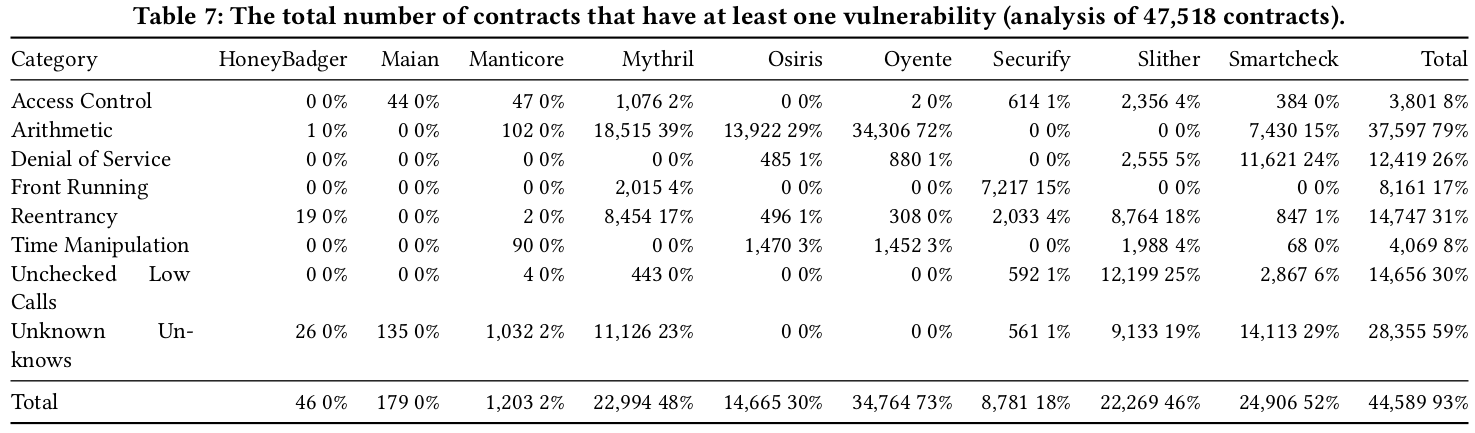

We found that only 42% of the vulnerabilities from our annotated dataset are detected by all the tools combined, with Mythril having the highest accuracy (27%). When considering the largest dataset, we observed that 97% of contracts are tagged as vulnerable, which suggests a considerable number of false positives. Indeed, only a small number of vulnerabilities (and of only two categories) were detected simultaneously by four or more tools.

We observe that the tools underperform in detecting vulnerabilities in the following three DASP10 categories: Access Control, Denial of Service, and Front Running. By design, they cannot detect vulnerabilities in the Bad Randomness and Short Addresses categories.

We also observe that Mythril outperforms the other tools both in the number of detected vulnerabilities (31/115, 27%) and in the number of vulnerability categories that it targets (5/9 categories). Combining Mythril and Slither detects a total of 42/115 (37%) vulnerabilities, which is the best trade-off between accuracy and execution costs.
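The percentages quoted here follow directly from the counts. A small helper (the name `detection_rate` is ours, not part of SmartBugs) reproduces them:

```python
def detection_rate(detected, total):
    """Share of annotated vulnerabilities detected, as a whole-number percentage."""
    return round(100 * detected / total)


TOTAL_VULNERABILITIES = 115  # annotated vulnerabilities, per the text above

print(detection_rate(31, TOTAL_VULNERABILITIES))  # Mythril alone: 27
print(detection_rate(42, TOTAL_VULNERABILITIES))  # Mythril + Slither: 37
```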

The 9 tools identify vulnerabilities in 93% of the contracts, which suggests a high number of false positives. Oyente alone detects vulnerabilities in 73% of the contracts. When we combine the tools to build a consensus, we observe that only a small number of vulnerabilities received a consensus of four or more tools: 937 for Arithmetic and 133 for Reentrancy.
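As an illustration of what a consensus of four or more tools means, here is a minimal sketch. It assumes findings are compared per contract and per category, which may be coarser or finer than the matching used in the study. The function name and the sample findings are invented for the example and are not results from the experiment.

```python
from collections import defaultdict


def consensus(findings, min_tools=4):
    """Keep (contract, category) pairs reported by at least min_tools distinct tools."""
    tools_by_issue = defaultdict(set)
    for tool, contract, category in findings:
        tools_by_issue[(contract, category)].add(tool)
    return {issue for issue, tools in tools_by_issue.items() if len(tools) >= min_tools}


# Made-up sample data: four tools agree on c1.sol, only two on c2.sol.
findings = [
    ("mythril", "c1.sol", "Arithmetic"),
    ("osiris", "c1.sol", "Arithmetic"),
    ("oyente", "c1.sol", "Arithmetic"),
    ("smartcheck", "c1.sol", "Arithmetic"),
    ("slither", "c2.sol", "Reentrancy"),
    ("oyente", "c2.sol", "Reentrancy"),
]

agreed = consensus(findings)
print(agreed)  # {('c1.sol', 'Arithmetic')}
```

Using a set of tool names per issue means that a tool reporting the same issue twice still counts once toward the threshold.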

On average, the tools take 4m31s to analyze one contract. However, the execution time varies widely between the tools. Slither is the fastest tool and takes on average only 5 seconds to analyze a contract. Manticore is the slowest tool: it takes on average 24m28s to analyze a contract. We also observe that execution speed is not the only factor that impacts the performance of the tools. Securify took more time to execute than Maian, but Securify can easily be parallelized and can therefore analyze the 47,518 contracts much faster than Maian. We have not observed a correlation between accuracy and execution time.

How it all started

Pedro Cruz, my first MSc student at IST, started his project in September 2018 and successfully defended his thesis in October 2019. His project was on static analysis of Solidity smart contracts. He originally proposed to extend one of the existing static analysis tools, but we soon realised that there were no public datasets that could be used to properly compare his efforts with existing research. As a result, the first step was to create an annotated dataset; we then had the idea of creating an execution framework that could simplify and automate the execution of analysis tools on datasets of smart contracts.

And there you go. All our artifacts are publicly available and we welcome contributions. If you don’t like SmartBugs’ command-line interface, we also have an experimental web dashboard.

Any questions, please let us know: @thodurieux @jff @rmaranhao @pedrocrvz